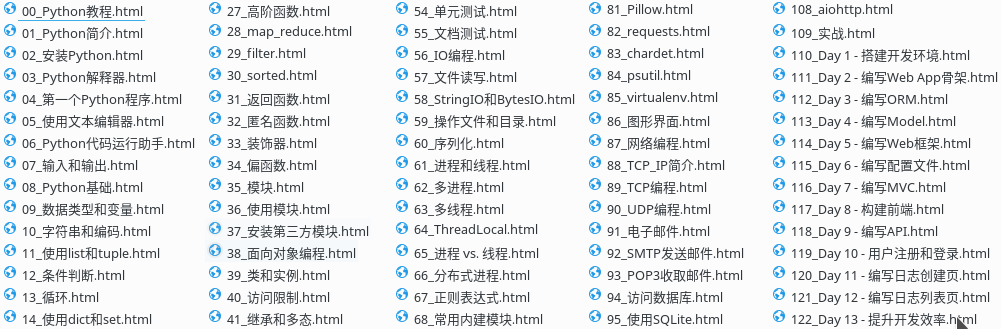

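This post crawls Liao Xuefeng's Python tutorial, following the article "Python Crawler: Converting Liao Xuefeng's Tutorial into a PDF E-book" (Python 爬虫:把廖雪峰教程转换成 PDF 电子书).

This was my first time working with crawlers, which had always seemed mysterious to me. Once I started, I realized I had already touched parts of this before, ha ha.

As I understand it, a crawler is request plus parse. It is not very different from ordinary web programming, except that it leans more heavily on the request side; in some cases no parsing is needed at all.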
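To make the "request plus parse" split concrete, here is a minimal sketch using only the Python standard library rather than the libraries used later in this post. The names `fetch`, `parse_title`, and `TitleParser` are made up for illustration, and extracting the page title is just a stand-in for whatever a real crawler would parse.

```python
from html.parser import HTMLParser
from urllib.request import urlopen


class TitleParser(HTMLParser):
    """Collect the text found inside <title> elements."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def fetch(url):
    """Request step: download the page and decode it as UTF-8."""
    with urlopen(url) as response:
        return response.read().decode("utf-8")


def parse_title(html):
    """Parse step: pull the page title out of the HTML text."""
    parser = TitleParser()
    parser.feed(html)
    return parser.title.strip()


# The parse step works on any HTML string, so it can be tried without a network call.
sample = "<html><head><title> Python tutorial </title></head><body></body></html>"
print(parse_title(sample))
```

In a real run the two steps would be chained as `parse_title(fetch(url))`; keeping them separate makes it easy to swap in a different request or parsing library.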

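Crawler tools

requests and BeautifulSoup are often called the two essential crawler libraries, and scrapy is a well-known framework. There is also PyV8 for executing JavaScript from Python, and the browser-simulating combinations selenium + phantomjs and selenium + chrome/firefox. There are plenty of tools to explore at your own pace.

This post uses requests, BeautifulSoup, and pdfkit. pdfkit requires wkhtmltopdf: on Windows, download and install the latest version; on Linux, I recommend version 0.12.3.2 (as of this writing).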

```shell
$ sudo apt-get install wkhtmltopdf=0.12.3.2-3
```

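Anti-crawler mechanism on Liao Xuefeng's site

Crawling is mostly about the request

Trying a request

If the request is not set up correctly, the server returns 503. This HTTP status code (Service Unavailable) means the server is either overloaded or refusing to serve the client.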

```python
>>> r = requests.get("https://www.liaoxuefeng.com/wiki/0014316089557264a6b348958f449949df42a6d3a2e542c000")
```
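When experimenting with different headers, it helps to see the status code without an exception interrupting the session. The following is a rough sketch using the standard library instead of requests; `fetch_status` and its `opener` parameter are hypothetical names introduced here, and the `opener` hook exists only so the function can be tried without touching the network.

```python
import urllib.error
import urllib.request


def fetch_status(url, headers=None, opener=urllib.request.urlopen):
    """Return the HTTP status code for url, including error codes such as 503."""
    request = urllib.request.Request(url, headers=headers or {})
    try:
        with opener(request) as response:
            return response.status
    except urllib.error.HTTPError as error:
        # urllib raises HTTPError for 4xx/5xx responses; the code is on the exception.
        return error.code


def fake_opener(request):
    """Stand-in for a server that rejects the request, used only for this demo."""
    raise urllib.error.HTTPError(request.full_url, 503, "Service Unavailable", {}, None)


print(fetch_status("https://example.com/", opener=fake_opener))
```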

Visiting the page directly in a browser sends these request headers:

```
Request Headers
```

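Why the request fails

Comparing against the browser's request headers, three things stand out:

- A browser-like User-Agent: ….

- The page the request came from, Referer: …

- The Cookie

The first two are simple: just add the request headers. Only the Cookie is harder, because it is set by JavaScript. After the analysis below, though, it turns out to be easy as well.

Setting the headers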

```python
headers = {"User-Agent":"Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36",
           # …
           }
```
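As a rough illustration of attaching the three headers discussed above, the sketch below builds a request object with the standard library and inspects it without sending anything. The `Referer` value and the `Cookie` placeholder are assumptions made up for this example, not values taken from the original script, and `build_request` is a hypothetical helper name.

```python
import urllib.request

USER_AGENT = ("Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 "
              "(KHTML, like Gecko) Chrome/59.0.3071.115 Safari/537.36")


def build_request(url, referer, cookie):
    """Attach browser-like headers to a request object without sending it."""
    headers = {
        "User-Agent": USER_AGENT,  # pretend to be a desktop browser
        "Referer": referer,        # assumed value: the page we supposedly came from
        "Cookie": cookie,          # placeholder until the real cookie format is known
    }
    return urllib.request.Request(url, headers=headers)


req = build_request(
    "https://www.liaoxuefeng.com/wiki/0014316089557264a6b348958f449949df42a6d3a2e542c000",
    referer="https://www.liaoxuefeng.com/",
    cookie="name=value",
)
# urllib normalizes header names with str.capitalize(), hence "User-agent".
print(req.get_header("User-agent"))
print(req.get_header("Referer"))
```

With requests, the same dictionary would simply be passed as `headers=` to `requests.get`.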

Analyzing how the cookie is set

The analysis is quick to write up, but it took quite a while and a lot of trial and error.

The page contains the following snippet, which is the key to how the cookie is set:

```html
<script>
(function () {
    eval(decodeURIComponent('%69f%28%21get%43%6F%6F%6B%69%65(%27%61tsp%27))%73%65%74C%6F%6F%6B%69%65(%27%61tsp%27%2C%20%271518835160255%5F%27%2Bnew%20Date%28%29.getTime%28%29%2C%20580%29%3B'));
})();
</script>
```

Running it in the Chrome Console gives:

```
> decodeURIComponent('%69f%28%21get%43%6F%6F%6B%69%65(%27%61tsp%27))%73%65%74C%6F%6F%6B%69%65(%27%61tsp%27%2C%20%271518835160255%5F%27%2Bnew%20Date%28%29.getTime%28%29%2C%20580%29%3B')
<- "if(!getCookie('atsp'))setCookie('atsp', '1518835160255_'+new Date().getTime(), 580);"
```

In other words, if the atsp cookie has not been set yet, the script sets it.
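The same decoding can be done from Python, which is handy if you want to inspect the script without opening a browser. This is a small sketch using `urllib.parse.unquote` as a stand-in for `decodeURIComponent`; the regular expression that pulls out the timestamp prefix is my own addition for illustration.

```python
import re
from urllib.parse import unquote

# The percent-encoded string from the page, split across lines for readability.
ENCODED = (
    "%69f%28%21get%43%6F%6F%6B%69%65(%27%61tsp%27))"
    "%73%65%74C%6F%6F%6B%69%65(%27%61tsp%27%2C%20"
    "%271518835160255%5F%27%2Bnew%20Date%28%29.getTime%28%29"
    "%2C%20580%29%3B"
)

decoded = unquote(ENCODED)
print(decoded)

# Extract the digits that precede "_" inside the quoted cookie prefix.
prefix = re.search(r"'(\d+)_'", decoded).group(1)
print(prefix)
```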

Under Sources there is this piece of JavaScript:

```javascript
// Found in https://cdn.liaoxuefeng.com/cdn/static/themes/default/js/all.js?v=1b39f7c
function getCookie(e) {
    var t = document.cookie.match("(^|;) ?" + e + "=([^;]*)(;|$)");
    return t ? t[2] : null
}

function setCookie(e, t, n) {
    var i = new Date((new Date).getTime() + 1e3 * n);
    document.cookie = e + "=" + t + ";path=/;expires=" + i.toGMTString() + ("https" === location.protocol ? ";secure" : "")
}
```
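To see what `getCookie` actually matches, here is a loose Python port of its regular expression. It is only a sketch for experimenting with cookie strings: `get_cookie_value` is a hypothetical name, the `demo` and `other` cookies are invented, and `re.escape` is added as a precaution that the JavaScript version does not have.

```python
import re


def get_cookie_value(cookie_string, name):
    """Return the value of cookie `name` from a document.cookie-style string, or None."""
    pattern = r"(^|;) ?" + re.escape(name) + r"=([^;]*)(;|$)"
    match = re.search(pattern, cookie_string)
    return match.group(2) if match else None


cookies = "demo=1; atsp=1518837501367_1518837501845; other=2"
print(get_cookie_value(cookies, "atsp"))
print(get_cookie_value(cookies, "missing"))
```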

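So it is clear: all we need is the value passed in as parameter t. On closer inspection, though, we do not even need to fetch it; we can construct it ourselves.

Running this in the Chrome Console:

```
> getCookie('atsp')
<- "1518837501367_1518837501845"
```

The value to the left of "_" is almost equal to the local timestamp at which the browser runs the JS (new Date().getTime()), so the cookie can be produced like this:

```python
import time

def get_cookie():
    # Use the current time in milliseconds for both halves of the atsp value
    timeStamp = str(int(time.time()*1000))
    return "atsp=" + timeStamp + "_" + timeStamp
```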

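The cookie also contains Hm_lvt and Hm_lpvt, which can be analyzed the same way. They are related to Baidu; I did not look into what exactly they do (possibly traffic statistics?). Either way, they can be ignored.

With that, crawling becomes trivial.

Code snippets

A session is not really necessary here, since none of its features are actually used.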

The code is rough; I will post a new version once I find time to refactor it. For the complete code, see the article linked at the beginning of this post.

```python
import time

import pdfkit
import requests
from bs4 import BeautifulSoup

# headers, count, html_template and prety_file_name are defined elsewhere in the full script.

def get(url):
    """
    Send a GET request and return the response.
    """
    headers["Cookie"] = get_cookie()
    session = requests.session()
    response = session.get(url, headers=headers)
    response.raise_for_status()
    return response

def get_cookie():
    # Build the atsp cookie from the current time in milliseconds
    timeStamp = int(time.time()*1000)
    return "atsp=" + str(timeStamp) + "_" + str(timeStamp)

def parse_url_to_html(url, break_point):
    """
    Fetch the content at url, parse it, and write it to a file.
    """
    global count
    # Skip pages until the counter has passed break_point
    if count <= break_point:
        count += 1
        return True
    response = get(url)
    soup = BeautifulSoup(response.content, "html5lib")
    content = soup.find_all(class_="x-content")[0]
    # Promote the h4 title to h1 and append the article body
    html = str(content.find_next("h4")).replace("h4", "h1") + str(content.find_all(class_="x-wiki-content")[0])
    html = html_template.format(content=html)
    with open("html/{}{}.html".format(str("%02d" % count), prety_file_name("_" + content.find_next("h4").getText())), 'wb') as f:
        f.write(html.encode())
    count += 1
    # Pause for 4 seconds whenever the counter reaches a multiple of 5
    if count%5 == 0:
        time.sleep(4)

def save_pdf(htmls):
    """
    Convert all the HTML files into a PDF file.
    """
    options = {
        'page-size': 'Letter',
        'margin-top': '0.75in',
        'margin-right': '0.75in',
        'margin-bottom': '0.75in',
        'margin-left': '0.75in',
        'encoding': "UTF-8",
        'custom-header' : [
            ('Accept-Encoding', 'gzip')
        ],
        'cookie' : [
            ('cookie-name1', 'cookie-value1'),
            ('cookie-name2', 'cookie-value2'),
        ],
        'no-outline': None
    }
    file_name = "pdf/test.pdf"
    pdfkit.from_file(htmls, file_name, options=options)
```
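`save_pdf` expects a list of HTML file paths, and the snippet above does not show how that list is built. One possible way, sketched here under the assumption that the two-digit prefix written by `parse_url_to_html` determines chapter order, is to sort the file names. `collect_html_files` is a hypothetical helper, and the file names in the demo are invented.

```python
import os
import tempfile


def collect_html_files(directory):
    """Return the paths of all .html files in directory, sorted by file name."""
    names = sorted(name for name in os.listdir(directory) if name.endswith(".html"))
    return [os.path.join(directory, name) for name in names]


# Demo with throwaway files so the ordering can be checked without crawling anything.
with tempfile.TemporaryDirectory() as tmp:
    for name in ("02_b.html", "00_intro.html", "01_a.html", "notes.txt"):
        open(os.path.join(tmp, name), "w").close()
    ordered = [os.path.basename(path) for path in collect_html_files(tmp)]

print(ordered)
```

The result could then be passed along as `save_pdf(collect_html_files("html"))`. Note that a plain string sort only matches numeric order while the prefix stays at two digits, that is, for up to 100 pages.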

Finally, happy Spring Festival!